Why AI-powered security tools are your secret weapon against tomorrow's attacks

It's an age-old adage of cyber defense that an attacker has to find just one weakness or exploit, but the defender has to defend against everything. The challenge of AI, when it comes to cybersecurity, is that it is an arms race in which weapons-grade AI capabilities are available to both attackers and defenders.

Cisco is one of the world's largest networking companies. As such, it is on the front lines of defending against AI-powered cyberattacks.

Also: 4 expert security tips for navigating AI-powered cyber threats

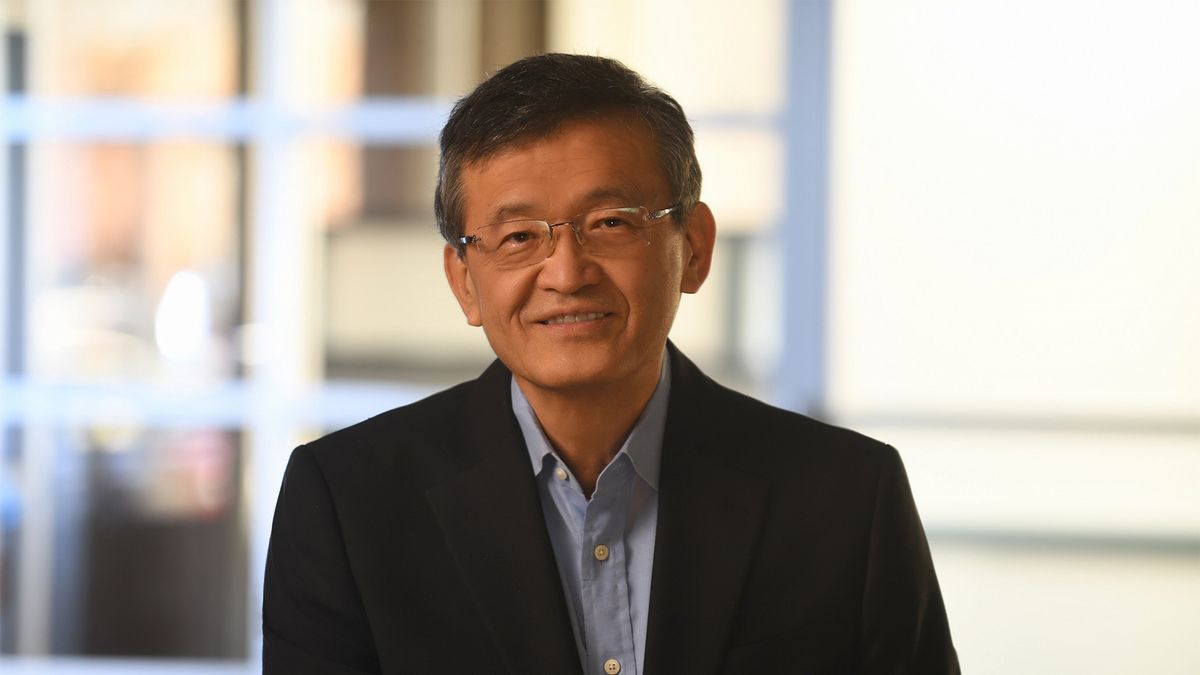

In this exclusive interview, ZDNET sits down with Cisco's AI products VP, Anand Raghavan, to discuss how AI-powered tools are revolutionizing cybersecurity and expanding organizations' attack surfaces.

ZDNET: Can you briefly introduce yourself and describe your role at Cisco?

Anand Raghavan: I'm Anand Raghavan, VP Products, AI for the AI Software and Platforms Group at Cisco. We focus on working with product teams across Cisco to bring together transformative, safe, and secure Gen AI-powered products to our customers.

Two products that we launched in the recent past are the Cisco AI Assistant which makes it easy for our customers to interact with our products using natural language, and Cisco AI Defense which enables safe and secure use of AI for employees and for cloud applications that organizations build for their customers.

ZDNET: How is AI transforming the nature of threats enterprises and governments face at the network level?

AR: AI has completely changed the game for network security, enabling hackers to launch more sophisticated and less time-intensive attacks. They're using automation to launch more personalized and effective phishing campaigns, which means employees may be more likely to fall for phishing attempts.

We're seeing malware that uses AI to adapt to avoid detection from traditional network security tools. As AI tools become more common, they expand the attack surface that security teams need to manage and they exacerbate the existing problem of shadow IT.

Just as companies have access to AI to build new and interesting applications, bad actors have access to the same sets of technologies to create new attacks and threats. It has become more important than ever to use the latest in advancements in AI to be able to identify these new kinds of threats and to automate the remediation of these threats.

Also: The head of US AI safety has stepped down. What now?

Whether it is malicious connections that can be stopped in real-time in the encrypted domain within our firewalls using our Encrypted Visibility Engine technology, or our language-based detectors of fraudulent emails in our Email Threat Defense product, it has become critical to understand the new attack surface of threats and how to protect against them.

With the advent of customer-facing AI applications, models and model-related vulnerabilities have become critical new attack surfaces. AI models can be the target of threats. Prompt injection or denial of service attacks may inadvertently leak sensitive data. The security industry has responded quickly to incorporate AI into solutions to spot unusual patterns and detect suspicious network activity. but it's a race to stay one step ahead.

ZDNET: How do AI-driven tools help enterprises stay ahead of increasingly sophisticated cyber adversaries?

AR: In an evolving threat landscape, AI-powered security tools deliver continuous and self-optimizing monitoring at a scale that manual monitoring can't match.

Using AI, a security team can analyze data from various sources across a company's entire ecosystem and detect unusual patterns or suspicious traffic that could indicate a data breach. Because AI analyzes this data more quickly than humans, organizations can respond to incidents in near real-time to mitigate potential threats.

Also: What is DeepSeek AI? Is it safe? Here's everything you need to know

When it comes to threat monitoring and detection, AI offers security professionals a "better together" scenario where the human professionals get visibility and response times with the AI that they wouldn't be able to achieve solo.

In a world where experienced top-level Tier 3 analysts in the SOC [security operations center] are harder to find, AI can be an integral part of an organization's strategy to aid and assist Tier 1 and Tier 2 analysts in their jobs and drastically reduce their mean time to remediation for any new discovered incidents and threats.

Workflow automation for XDR [extended detection and response] using AI will help enterprises stay ahead of cyber adversaries.

ZDNET: Explain AI Defense, and what is the main problem it aims to solve?

AR: When you think about how quickly people have adopted AI applications, it's off the charts. Within organizations, however, AI development and adoption isn't moving as quickly as it could be because people still aren't sure it's safe or they aren't confident they can keep it secure.

According to Cisco's 2024 AI Readiness Index, only 29% of organizations feel fully equipped to detect and prevent unauthorized tampering with AI. Companies can't afford to risk security by moving too quickly, but they also can't risk being lapped by their competition because they didn't embrace AI.

Also: Tax scams are getting sneakier - 10 ways to protect yourself before it's too late

AI Defense enables and safeguards AI transformation within enterprises, so they don't have to make this tradeoff. In the future, there will be AI companies and companies that are irrelevant.

Thinking about this challenge at a high level, AI poses two overarching risks to an enterprise. The first is the risk of sensitive data exposure from employees misusing third-party AI tools. Any intellectual property or confidential information shared with an unsanctioned AI application is susceptible to leakage and exploitation.

The second risk is related to how businesses develop and deploy their own AI applications. AI models need to be protected from threats such as prompt injections or training data poisoning, so they continue to operate the way that they are intended and are safe for customers to use.

Also: AI is changing cybersecurity and businesses must wake up to the threat

Cisco AI Defense addresses both areas of AI risk. Our AI Access solution gives security teams a comprehensive view of third-party AI applications in use and enables them to set policies that limit sensitive data sharing or restrict access to unsanctioned tools.

For businesses developing their own AI applications, AI Defense uses algorithmic red team technology to automate vulnerability assessments for models.

After identifying these risks in seconds, AI Defense provides runtime guardrails to keep AI applications protected against threats like prompt injections, data extraction, and denial of service in real-time.

ZDNET: How does AI Defense differentiate itself from existing security frameworks?

AR: The safety and security of AI is a massive new challenge that enterprises are only just beginning to contend with. After all, AI is fundamentally different from traditional applications and existing security frameworks don't necessarily apply in the same ways.

AI Defense is purpose-built to protect enterprises from the risks of AI application usage and development. Our solution is built on Cisco's own custom AI models with two main principles: continuous AI validation and protection at scale.

Also: That weird CAPTCHA could be a malware trap - here's how to protect yourself

When it comes to securing traditional applications, companies use a red team of human security professionals to try to jailbreak the app and find vulnerabilities. This approach doesn't provide anywhere near the scale needed to validate non-deterministic AI models. You'd need teams of thousands working for weeks.

This is why AI Defense uses an algorithmic red teaming solution that continuously monitors for vulnerabilities and recommends guardrails when it finds them. Cisco's platform approach to security means that these guardrails are distributed across the network and the security team gets total visibility across their AI footprint.

ZDNET: What is Cisco's vision for integrating AI Defense with broader enterprise security strategies?

AR: Cisco's 2024 AI Readiness Index showed that while organizations face mounting pressure to adopt AI, most organizations are still not ready to capture AI's potential and many lack awareness around AI security risks.

With solutions like AI Defense, Cisco is enabling organizations to unlock the benefits of AI and do so securely. Cisco AI Defense is designed to address the security challenges of a multi-cloud, multi-model world in which organizations operate.

Also: How Cisco, LangChain, and Galileo aim to contain 'a Cambrian explosion of AI agents'

It gives security teams visibility and control over AI applications and is frictionless for developers, saving them time and resources so they can focus on innovating.

When an organization is looking to adopt AI, both for employees and to build customer-facing applications, their adoption lifecycle has the following steps:

- Visibility: Understand what tools are being used by employees, or what models are being deployed in their cloud environments.

- Validation: Monitor and validate models running in their cloud environments and assess their vulnerabilities and identify guardrails as compensating controls for these vulnerabilities.

- Runtime protection: When these models get deployed in production, monitor all prompts and responses, and apply safety, security, privacy, and relevance guardrails to these prompts and responses to ensure that their customers have a safe and secure experience interacting with these cloud applications.

These are the core areas that AI Defense supports as part of its capabilities. Enforcement can happen in a Secure Access or SASE [secure access service edge] product for employee protection, and enforcement for cloud applications can happen in a Cloud Protection Suite application like Cisco Multicloud Defense.

ZDNET: What strategies should enterprises adopt to mitigate the risks of adversarial attacks on AI systems?

AR: AI applications introduce a new class of security risks to an organization's tech stack. Unlike traditional apps, AI apps include models, which are unpredictable and non-deterministic. When models don't behave as they are supposed to, they can result in hallucinations and other unintended consequences. Models can also fall victim to attacks like training data poisoning, prompt injection, and jailbreaking.

Model builders and developers will both have security layers in place for AI models, but in a multi-cloud, multi-model system, there will be inconsistent safety and security standards. To protect against AI tampering and the risk of data leakage, organizations need a common substrate of security across all clouds, apps, and models.

Also: This powerful firewall delivers enterprise-level security at a home office price

This becomes even more important when you have fragmented accountability across stakeholders -- model builders, app builders, governance, risk, and compliance teams.

Having a common substrate in terms of an AI security product that can monitor and enforce the right set of guardrails that protect across all categories of AI safety and security as outlined by standards such as MITRE ATLAS and OWASP LLM10 and NIST RMF becomes vital.

ZDNET: Could you share a real-world scenario or case study where AI Defense could prevent a critical security breach?

AR: As I mentioned, AI Defense covers the two main areas of enterprise AI risk: the usage of third-party AI tools and the development of new AI applications. Let's look at incident scenarios for each of these use cases.

In the first scenario, an employee shares information about some of your customers with an unsanctioned AI assistant for help preparing a presentation. This confidential data can become codified in the AI's retraining data, meaning it can be shared with other public users. AI Defense can limit this data sharing or restrict access to the unsanctioned tool entirely, mitigating the risk of what would otherwise be a devastating privacy violation.

Also: The best malware removal software: Expert tested and reviewed

In the second scenario, an AI developer uses an open-source foundation model to create an AI customer service assistant. They fine-tune it for relevance but inadvertently weaken its built-in guardrails. Within days, it's hallucinating incorrect responses and becoming more susceptible to adversarial attack. With continuous monitoring and vulnerability testing, AI Defense would identify the flaw in the model and apply your preferred guardrails automatically.

ZDNET: What emerging trends in AI security do you foresee shaping the future of cybersecurity?

AR: One critical aspect of AI in security is that we're seeing exploit times decrease. Security professionals have a shorter window than ever between when a vulnerability is discovered and when it is exploited by attackers.

As AI makes cybercriminals faster and their attacks more efficient, it's increasingly urgent that organizations detect and patch vulnerabilities quickly. AI can significantly speed up the detection of vulnerabilities so security teams can respond in real time.

Also: Most people worry about deepfakes - and overestimate their ability to spot them

Deepfakes are going to be a massive security concern over the next five years. In many ways, the security industry is just getting ready for deepfakes and how to defend against them, but this will be a critical area of vulnerability and risk for organizations.

The same way denial-of-service attacks were a major concern 10 years ago and ransomware has been a critical threat in more recent years, deepfakes are going to keep a lot of security professionals up at night.

ZDNET: How can governments and enterprises collaborate to build robust AI security standards?

AR: By working together, governments and the private sector can tap into a deep pool of knowledge and wide spectrum of perspectives to develop best practices in a quickly evolving risk landscape of AI and security.

Last year, Cisco worked with the Cybersecurity and Infrastructure Security Agency's (CISA) Joint Cyber Defense Collaborative (JCDC), which brought together industry leaders from some of the biggest players in tech, such as OpenAI, Amazon, Microsoft, and Nvidia, and government agencies to collaborate with the goal of enhancing organizations' collective ability to respond to AI-related security incidents.

Also: When you should use a VPN - and when you shouldn't

We participated in a tabletop exercise and collaborated on the recently released "AI Security Incident Collaboration Playbook," which is a guide for collaboration between government and private industry.

It offers practical, actionable advice for responding to AI-related security incidents and guidance on voluntarily sharing information related to vulnerabilities associated with AI systems.

Together, government and the private sector can raise awareness of security risks facing this critical technology.

ZDNET: How do you see AI bridging the gap between cyberattack prevention and incident response?

AR: We're already seeing AI-enabled security solutions deliver continuous and scalable monitoring that helps human security teams detect suspicious network activity and vulnerabilities.

We're in the stage where AI is an invaluable tool that gives security professionals better visibility and recommendations on how to respond to security incidents.

Also: Why OpenAI's new AI agent tools could change how you code

Eventually, we'll reach a point where AI can automatically deploy and implement security patches with oversight from a human security professional. The benefits, in a nutshell, are continuity (always monitoring), scalability (as your attack surface grows, AI helps you manage it), accuracy (AI can detect even more subtle indicators that a human might miss), and speed (faster than manual review).

Are you prepared?

AI is transforming cybersecurity, but are enterprises truly prepared for the risks it brings? Have you encountered AI-driven cyber threats in your organization?

Do you think AI-powered security solutions can stay ahead of increasingly sophisticated attacks? How do you see the balance between AI as a security tool and a potential vulnerability?

Are companies doing enough to secure their AI models from exploitation? Let us know in the comments below.

You can follow my day-to-day project updates on social media. Be sure to subscribe to my weekly update newsletter, and follow me on Twitter/X at @DavidGewirtz, on Facebook at Facebook.com/DavidGewirtz, on Instagram at Instagram.com/DavidGewirtz, on Bluesky at @DavidGewirtz.com, and on YouTube at YouTube.com/DavidGewirtzTV.

What's Your Reaction?

Like

0

Like

0

Dislike

0

Dislike

0

Love

0

Love

0

Funny

0

Funny

0

Angry

0

Angry

0

Sad

0

Sad

0

Wow

0

Wow

0

:quality(85):upscale()/2025/03/12/764/n/1922729/35f51b5a67d1c289508700.05751786_.jpg)

:quality(85):upscale()/2025/03/12/768/n/1922729/97d8922c67d1c3de760668.45117389_.jpg)

:quality(85):upscale()/2025/03/11/836/n/49352476/9ec5f53567d0894c872ef5.91564818_.png)

:quality(85):upscale()/2025/03/11/821/n/49351758/tmp_vlUKOq_9b7c7998fe9b52c1_Main_PS25_03_Identity_LoveAtSauna_1456x970.jpg)