5 ways to use generative AI more safely - and effectively

It's increasingly difficult to avoid artificial intelligence (AI) as it becomes more commonplace. A prime example is Google Search, which now shows AI-generated responses alongside results. AI safety is more important than ever in this age of technological ubiquity. So as an AI user, how can you use generative AI (Gen AI) safely?


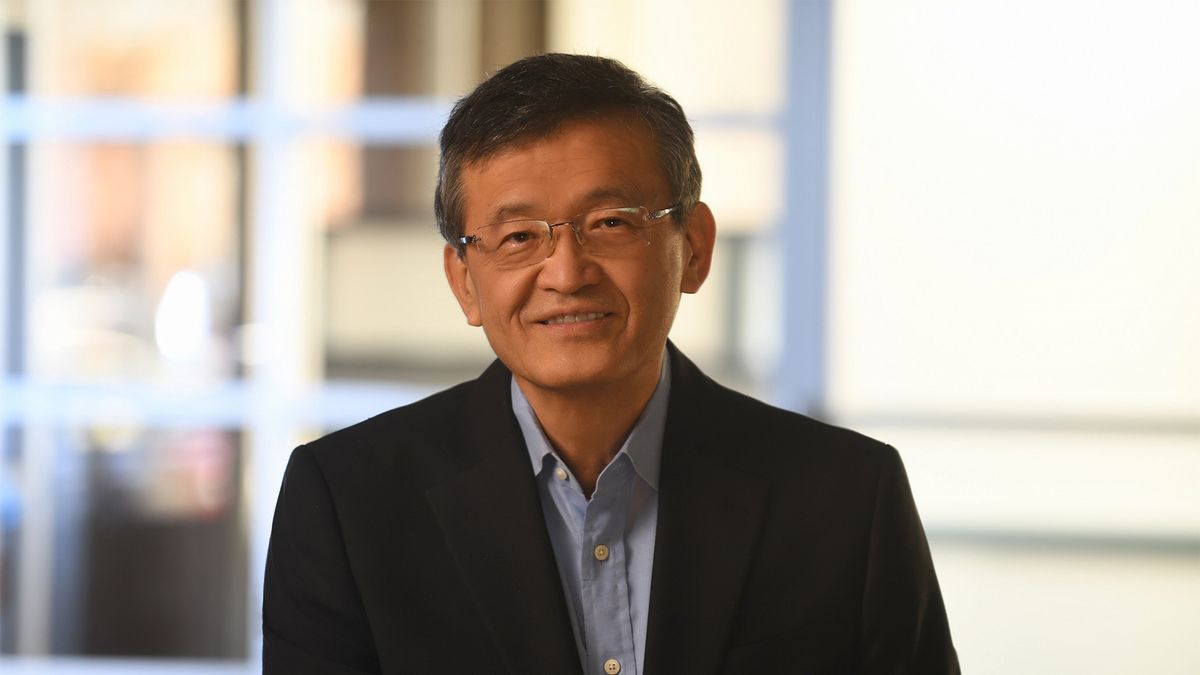

Carnegie Mellon University School of Computer Science assistant professors Maarten Sap and Sherry Tongshuang Wu took the SXSW stage to explain the shortcomings of large language models (LLMs), the type of machine learning model behind popular generative AI tools such as ChatGPT, and how people can use these technologies more effectively.

"They are great, and they are everywhere, but they are actually far from perfect," said Sap.


The tweaks you can make to your everyday interactions with AI are simple. They will protect you from AI's shortcomings and help you get more out of AI chatbots, including more accurate responses. Keep reading to learn the five things you can do to optimize your AI use, according to the experts.

1. Give AI better instructions

Because of AI's conversational capabilities, people often use short, underspecified prompts, as if chatting with a friend. The problem is that when given too little instruction, AI systems may misinterpret your prompt, because they lack the human skills that would allow them to read between the lines.

To illustrate this issue, Sap and Wu told a chatbot during their session that they were reading a million books, and the chatbot took the statement literally instead of recognizing it as hyperbole. Sap shared that his research found modern LLMs interpret non-literal references literally more than 50% of the time.


The best way to circumvent this issue is to clarify your prompts with explicit requirements that leave less room for interpretation or error. Wu suggested thinking of chatbots as assistants and instructing them clearly about exactly what you want done. Even though this approach takes more work when writing a prompt, the result should align more closely with your requirements.
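To make the "treat it like an assistant" advice concrete, here is a minimal sketch that turns a vague request into an explicit one by spelling out the task, audience, output format, and constraints. The `PromptSpec` class, the `build_prompt` helper, and the field names are hypothetical illustrations, not part of any chatbot's API; the output is plain text you would paste into whichever tool you use.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the details a vague prompt leaves implicit."""
    task: str
    audience: str
    output_format: str
    constraints: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Render the spec as an explicit, line-by-line instruction."""
    lines = [
        f"Task: {spec.task}",
        f"Audience: {spec.audience}",
        f"Output format: {spec.output_format}",
    ]
    if spec.constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in spec.constraints)
    return "\n".join(lines)


# A vague prompt leaves the chatbot to guess what you mean.
vague = "tips for my trip?"

# The explicit version states each requirement instead of implying it.
explicit = build_prompt(PromptSpec(
    task="Suggest a 3-day itinerary for Lisbon",
    audience="A first-time visitor on a mid-range budget",
    output_format="A bulleted list, one bullet per day",
    constraints=[
        "Use public transport only",
        "Say so if you are unsure about opening hours",
    ],
))
print(explicit)
```

The structure matters more than the code: each labeled line removes one thing the model would otherwise have to infer.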

2. Double-check your responses

If you have ever used an AI chatbot, you know they can hallucinate, that is, output incorrect information. Hallucinations take several forms: factually incorrect responses, inaccurate summaries of information you provided, or agreement with false claims a user makes.

Sap said hallucinations happen between 1% and 25% of the time for general, everyday use cases. Hallucination rates are even higher in specialized domains such as law and medicine, exceeding 50%. These hallucinations are difficult to spot because they are presented in a way that sounds plausible, even when they are nonsensical.

Models often project confidence in their responses, using markers such as "I am confident" even when offering incorrect information. A research paper cited in the presentation found that AI models expressed certainty in incorrect responses 47% of the time.

As a result, the best way to protect against hallucinations is to double-check the responses you receive. Tactics include cross-verifying the output against external sources, such as Google or news outlets you trust, or asking the model the same question again in different wording to see whether it gives the same answer.
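The "ask again in different wording" tactic can be sketched as a simple agreement check. This is a minimal illustration, assuming you can call your chatbot through some function that takes a prompt and returns text; the `ask` parameter and the canned answers below are hypothetical stand-ins, not a real API or real model output.

```python
from collections import Counter
from typing import Callable


def consistency_check(ask: Callable[[str], str], paraphrases: list[str]) -> tuple[str, float]:
    """Ask the same question several ways; return the most common answer and its share."""
    # Normalize lightly so "1969" and " 1969 " count as the same answer.
    answers = [ask(p).strip().lower() for p in paraphrases]
    top_answer, count = Counter(answers).most_common(1)[0]
    return top_answer, count / len(answers)


# Hypothetical stand-in for a real chatbot call; swap in your own client.
canned = {
    "What year did Apollo 11 land on the Moon?": "1969",
    "In which year was the first crewed Moon landing?": "1969",
    "When did Apollo 11 touch down on the lunar surface (year only)?": "1968",
}
answer, agreement = consistency_check(lambda q: canned[q], list(canned))
if agreement < 1.0:
    print(f"Answers disagree ({agreement:.0%} agree on {answer!r}); verify with an external source.")
```

Disagreement is a useful red flag, but agreement is not proof: a model can repeat the same wrong answer every time, so this complements checking trusted external sources rather than replacing it.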


Although it can be tempting to get ChatGPT's assistance with subjects you don't know much about, it is easier to identify errors if your prompts remain within your domain of expertise.

3. Keep the data you care about private

Gen AI tools are trained on large amounts of data. They also require more data to keep learning and become smarter, more efficient models. As a result, the data that passes through these tools, including what users type into them, is often used for further training.


The issue is that models often regurgitate their training data in their responses, meaning your private information could surface in responses to someone else. There is also a risk when using web applications, because your private information leaves your device to be processed in the cloud, which has security implications.

The best way to maintain good AI hygiene is to avoid sharing sensitive or personal data with LLMs. In some cases, the help you need may involve personal data; you can redact that data so you still get help without the risk. Many AI tools, including ChatGPT, let users opt out of data collection. Opting out is always a good option, even if you don't plan on sharing sensitive data.
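Redaction can be as simple as swapping identifiers for placeholders before you paste text into a chatbot. The sketch below uses two deliberately crude regular expressions for email addresses and phone numbers; the patterns and the `redact` helper are illustrative assumptions, not a complete PII filter. They will miss many formats and can also over-match (for example, a date like 2025-03-12 looks like a phone number to the phone pattern), so review the result before sending it.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


msg = "Draft a reply to jane.doe@example.com and mention my number, +1 (555) 010-2233."
print(redact(msg))
# → Draft a reply to [EMAIL] and mention my number, [PHONE].
```

The chatbot can still draft the reply around the placeholders, and you fill in the real details locally afterward.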

4. Watch how you talk about LLMs

The capabilities of AI systems and the ability to talk to these tools in natural language have led some people to overestimate the power of these bots. Anthropomorphism, or the attribution of human characteristics, is a slippery slope. If people think of these AI systems as human-adjacent, they may trust them with more responsibility and data.


One way to help mitigate this issue is to stop attributing human characteristics to AI models when referring to them, according to the experts. Instead of saying, "the model thinks you want a balanced response," Sap suggested a better alternative: "The model is designed to generate balanced responses based on its training data."

5. Think carefully about when to use LLMs

Although it may seem like these models can help with almost every task, there are many instances in which they may not provide the best assistance. Benchmarks exist, but they cover only a small proportion of how users interact with LLMs.


LLMs also may not work equally well for everyone. Beyond the hallucinations discussed above, there are recorded instances of LLMs making racist decisions or reflecting Western-centric biases. These biases show that models may be unfit for many use cases.

As a result, the solution is to be thoughtful and careful when using LLMs. This approach includes evaluating the impact of using an LLM to determine whether it is the right solution to your problem. It also helps to look at which models excel at which tasks and to use the best model for your requirements.
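One lightweight way to find which model suits which task is to keep a log of your own spot checks and compare accuracy per task. The sketch below assumes a hypothetical list of `(model, task, answer_was_correct)` records; "model-a" and "model-b" are placeholder names, not real products, and the `best_model_per_task` helper is an illustration rather than an established evaluation method.

```python
from collections import defaultdict

# Hypothetical log of your own spot checks: (model, task, answer_was_correct).
checks = [
    ("model-a", "summarize", True), ("model-a", "summarize", True), ("model-a", "legal", False),
    ("model-b", "summarize", True), ("model-b", "summarize", False), ("model-b", "legal", True),
]


def best_model_per_task(log):
    """Return, for each task, the model with the highest observed accuracy."""
    tally = defaultdict(lambda: [0, 0])  # (model, task) -> [correct, total]
    for model, task, correct in log:
        tally[(model, task)][0] += int(correct)
        tally[(model, task)][1] += 1
    best = {}
    for (model, task), (correct, total) in tally.items():
        rate = correct / total
        # Keep the first model seen unless a later one does strictly better.
        if task not in best or rate > best[task][1]:
            best[task] = (model, rate)
    return best


print(best_model_per_task(checks))
# → {'summarize': ('model-a', 1.0), 'legal': ('model-b', 1.0)}
```

A handful of checks is not a rigorous benchmark, and a low score across every model is itself a sign that an LLM may be the wrong tool for that task.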
